I was reading up on the IKEv2 ciphers supported by GCP native VPNs today and thought I’d set up a quick lab to see which settings would provide the best throughput. The lab setup was as follows:

- Palo Alto VM-300 on an AWS m4.xlarge in us-east-2 (Ohio)

- IKEv2 VPN to GCP us-east4 (N. Virginia)

- Latency is a steady 13ms round trip time

- AWS side test instance is t3.xlarge (4 vCPU / 16 GB RAM)

- GCP side test instance is e2-standard-4 (4 vCPU / 16 GB RAM)

- Both VMs running Ubuntu Linux 18.04.4

- Test is a 500 MB binary file transferred via SCP
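The post does not say how the Mbps figures were derived. A minimal sketch, assuming they come from the elapsed time of each SCP copy and that "500 MB" means 500 × 10^6 bytes (the function name and the 6-second timing are hypothetical, for illustration only):

```python
def throughput_mbps(size_bytes: int, seconds: float) -> float:
    """Convert a transfer of size_bytes that took `seconds` into megabits per second."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return size_bytes * 8 / seconds / 1_000_000


# Assumption: 500 MB = 500 * 10^6 bytes. The 6.0 s duration is a made-up example.
FILE_SIZE_BYTES = 500 * 1_000_000
print(f"{throughput_mbps(FILE_SIZE_BYTES, 6.0):.0f} Mbps")  # prints "667 Mbps"
```

If the file was actually 500 MiB, swap in `500 * 1024 * 1024` and the results come out about 5% higher.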

Throughput speeds (in Mbps) using DH Group 14 (2048-bit) PFS:

| Encryption / Hash | SHA-512 | SHA-256 | SHA-1 |
| --- | --- | --- | --- |
| AES-GCM 256-bit | 664 | 668 | 672 |
| AES-GCM 128-bit | 648 | 680 | 704 |
| AES-CBC 256-bit | 510 | 516 | 616 |
| AES-CBC 192-bit | 492 | 523 | 624 |
| AES-CBC 128-bit | 494 | 573 | 658 |

Throughput speeds (in Mbps) using DH Group 5 (1536-bit) PFS:

| Encryption / Hash | SHA-512 | SHA-256 | SHA-1 |
| --- | --- | --- | --- |
| AES-GCM 256-bit | 700 | 557 | 571 |
| AES-GCM 128-bit | 660 | 676 | 616 |
| AES-CBC 256-bit | 464 | 448 | 656 |
| AES-CBC 192-bit | 595 | 528 | 464 |
| AES-CBC 128-bit | 605 | 484 | 587 |

Throughput speeds (in Mbps) using DH Group 2 (1024-bit) PFS:

| Encryption / Hash | SHA-512 | SHA-256 | SHA-1 |
| --- | --- | --- | --- |
| AES-GCM 256-bit | 680 | 626 | 635 |
| AES-GCM 128-bit | 672 | 664 | 680 |
| AES-CBC 256-bit | 584 | 452 | 664 |
| AES-CBC 192-bit | 536 | 520 | 664 |
| AES-CBC 128-bit | 528 | 502 | 656 |

Key Takeaways

GCP prefers AES-CBC in its negotiations, but AES-GCM provides roughly 25% better throughput. So if throughput is paramount, be sure to offer only AES-GCM in the IPSec profile.
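One way to arrive at a figure near 25% is to average the SHA-256 column of the Group 14 table by cipher mode. This is a sketch of that arithmetic, not necessarily how the number above was produced; the helper name `mode_mean` is made up for illustration:

```python
from statistics import mean

# SHA-256 column of the DH Group 14 table, in Mbps.
GROUP14_SHA256 = {
    "AES-GCM 256-bit": 668,
    "AES-GCM 128-bit": 680,
    "AES-CBC 256-bit": 516,
    "AES-CBC 192-bit": 523,
    "AES-CBC 128-bit": 573,
}


def mode_mean(results: dict[str, int], mode: str) -> float:
    """Average throughput of every cipher whose name contains `mode`."""
    return mean(v for name, v in results.items() if mode in name)


gcm = mode_mean(GROUP14_SHA256, "GCM")
cbc = mode_mean(GROUP14_SHA256, "CBC")
gain_pct = (gcm / cbc - 1) * 100
print(f"GCM {gcm:.0f} Mbps vs CBC {cbc:.0f} Mbps: {gain_pct:.1f}% faster")
```

Averaging over other columns or DH groups gives a different percentage, so treat the result as a ballpark.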

If using AES-CBC, SHA-1, while deprecated, is 13% faster than SHA-256 and 25% faster than SHA-512. Since SAs are rebuilt every 3 hours, cracking isn’t as large a concern as in typical SHA-1 use cases.

The DH group does not affect transfer speeds, so you may as well use the strongest mutually supported value, which is Group 14 (2048-bit). GCP does not support Elliptic Curve groups (19-21), so these couldn’t be tested. I would expect them to give faster SA build times, but no change in transfer speeds.
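A quick way to sanity-check the DH group observation is to average all 15 cells of each table and compare the three means. The sketch below does that with the numbers from the tables; the variable names are illustrative:

```python
from statistics import mean

# All 15 cells of each throughput table (Mbps), row by row, keyed by DH group.
RESULTS = {
    "Group 14": [664, 668, 672, 648, 680, 704, 510, 516, 616,
                 492, 523, 624, 494, 573, 658],
    "Group 5": [700, 557, 571, 660, 676, 616, 464, 448, 656,
                595, 528, 464, 605, 484, 587],
    "Group 2": [680, 626, 635, 672, 664, 680, 584, 452, 664,
                536, 520, 664, 528, 502, 656],
}

group_means = {group: mean(cells) for group, cells in RESULTS.items()}
for group, avg in group_means.items():
    print(f"{group}: {avg:.1f} Mbps")

# Relative gap between the fastest and slowest group average.
spread_pct = (max(group_means.values()) / min(group_means.values()) - 1) * 100
print(f"Spread between groups: {spread_pct:.1f}%")
```

The group averages land within a few percent of each other, which is small next to the cell-to-cell variation inside any single table.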

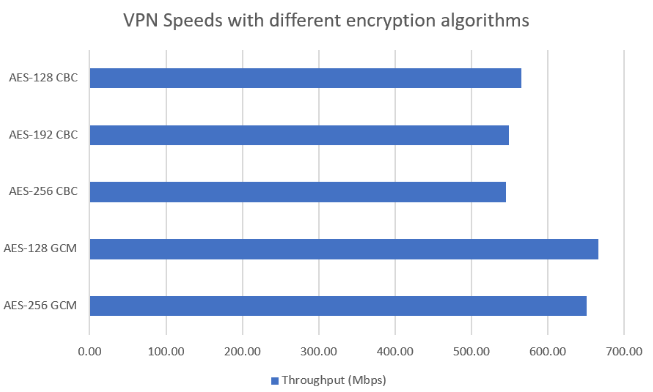

Assuming SHA-256 and Group 14 PFS, this graph summarizes the results: